Oxide at Home: Propolis says Hello

So Oxide is making some cool stuff huh? Big metal boxes with lots of computer in them. Servers as they should be! Too bad I can’t afford to buy one for myself… but wait, they’re open-sourcing the software they’re writing to do it. Mom said we can have Oxide at Home!

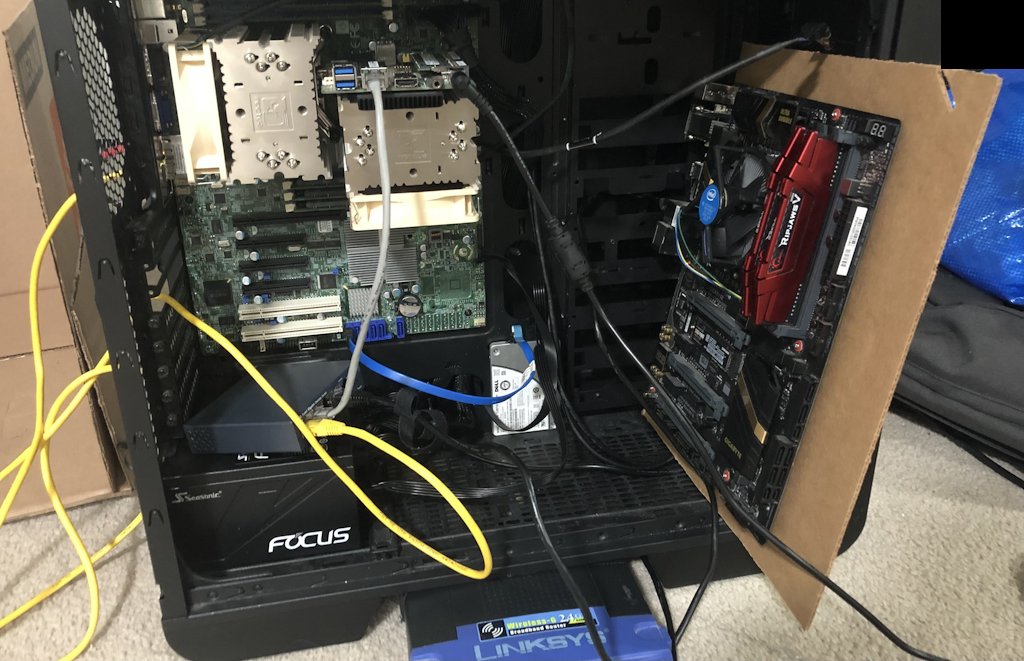

Oxide at Home:

Hardware, with the software haphazardly jammed in

Let’s be clear, I’m not aiming for elegance here. I’m not aiming for enterprise grade either. I want something dirty, something hacky, something that makes you go “what the fuck, why, no???????”.

To that end I’m choosing right at the start to make my life more interesting. Oxide’s software is mostly written for illumos, a direct descendant of OpenSolaris. There’s a handful of illumos distributions out there, but Oxide develops primarily for their distribution called Helios. Their Omicron README (no relation) also mentions OmniOS. Naturally I’m going to use neither of those and make it work on OpenIndiana instead.

You see, I can’t get a copy of Helios right now unless I commit corporate espionage, and OmniOS describes itself as “enterprise”. As I’ve already stated, I am not an enterprise, nor do I plan on becoming one unless Jean-Luc Picard starts taking estrogen and wants to be my captain. Tribblix was also in the running but I couldn’t get the installer to work, so I landed on OpenIndiana.

Anyways, everything I do, I’ll do with the intention of getting it working, not making it good. Expect awful things along the way.

But first, we need to summon Ferris

Oxide, as their name implies, likes to write software in Rust. Some of that software wants to use a nightly Rust too. Might help to have rustup huh? Well, there’s a couple problems. First, rustup’s install script is not actually as universal as they think it is:

vi@box:~$ curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

sh[455]: local: not found [No such file or directory]

sh[456]: local: not found [No such file or directory]

sh[457]: local: not found [No such file or directory]

sh[458]: local: not found [No such file or directory]

sh[202]: local: not found [No such file or directory]

sh[62]: local: not found [No such file or directory]

sh[65]: local: not found [No such file or directory]

sh: line 72: _ext: parameter not set
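A quick aside on that wall of `local: not found`: as far as I can tell, `/bin/sh` on this box is ksh93, and `local` is something bash and dash support but POSIX never standardized. Here’s a minimal sketch for checking which shells understand it. The function name `supports_local` is made up for this post and has nothing to do with rustup.

```shell
# supports_local SHELL: succeed if SHELL can run a function that uses `local`.
supports_local() {
    "$1" -c 'f() { local x=1; }; f' >/dev/null 2>&1
}

# Try whichever of these shells happen to be installed.
for candidate in sh bash; do
    if command -v "$candidate" >/dev/null 2>&1; then
        if supports_local "$candidate"; then
            echo "$candidate: has local"
        else
            echo "$candidate: no local"
        fi
    fi
done
```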

Fine, whatever, let’s pipe it to bash then,

vi@box:~$ curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | bash

ld.so.1: rustup-init: fatal: libgcc_s.so.1: open failed: No such file or directory

Excuse me the fuck? I uh,

vi@box:~$ find / -name 'libgcc_s.so.1'

/usr/sfw/lib/libgcc_s.so.1

/usr/sfw/lib/amd64/libgcc_s.so.1

/usr/pkgsrc/lang/rust/work/rust-1.55.0-x86_64-unknown-illumos/lib/pkgsrc/libgcc_s.so.1

/usr/gcc/7/lib/libgcc_s.so.1

/usr/gcc/7/lib/amd64/libgcc_s.so.1

/usr/gcc/11/lib/amd64/libgcc_s.so.1

/usr/gcc/11/lib/libgcc_s.so.1

/usr/gcc/3.4/lib/libgcc_s.so.1

/usr/gcc/3.4/lib/amd64/libgcc_s.so.1

/usr/gcc/10/lib/libgcc_s.so.1

/usr/gcc/10/lib/amd64/libgcc_s.so.1

What do you want from me, rustup? Well you see, it’s very simple:

vi@box:~$ pkg search file:basename:libgcc_s.so.1

INDEX ACTION VALUE PACKAGE

basename file usr/gcc/8/lib/amd64/libgcc_s.so.1 pkg:/system/library/gcc-8-runtime@8.4.0-2020.0.1.2

basename file usr/gcc/8/lib/libgcc_s.so.1 pkg:/system/library/gcc-8-runtime@8.4.0-2020.0.1.2

[... a bunch of other gcc versions skipped ...]

basename file usr/lib/amd64/libgcc_s.so.1 pkg:/system/library/gcc-4-runtime@4.9.4-2021.0.0.8

basename file usr/lib/libgcc_s.so.1 pkg:/system/library/gcc-4-runtime@4.9.4-2021.0.0.8

We need gcc-4-runtime! Obviously (/s). Oh we also need g++-4-runtime or we get another missing shared library but I’ll spare you the details.

vi@box:~$ sudo pkg install gcc-4-runtime g++-4-runtime

vi@box:~$ curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | bash

info: downloading installer

Welcome to Rust!

FINALLY. Ok.
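My best guess at why gcc-4-runtime is the magic package: every copy that `find` turned up lives under `/usr/gcc/...` or `/usr/sfw/...`, while the pkg search shows gcc-4-runtime putting one in `usr/lib/amd64`, which the runtime linker searches by default. Here’s a small sketch of that check. The helper name `find_in_dirs` is mine, and the two default directories are an assumption about illumos rather than something I looked up.

```shell
# find_in_dirs NAME DIR...: print the first DIR/NAME that exists, fail if none.
find_in_dirs() {
    name=$1
    shift
    for dir in "$@"; do
        if [ -e "$dir/$name" ]; then
            printf '%s\n' "$dir/$name"
            return 0
        fi
    done
    return 1
}

# Assumed default search path for 64-bit objects on illumos.
find_in_dirs libgcc_s.so.1 /lib/64 /usr/lib/64 \
    || echo "libgcc_s.so.1 is not on the default 64-bit search path"
```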

What’s a Propolis?

Oxide is making racks of lots of computer. My understanding is that they have a control plane that talks to all the sleds (the blades of computer). Each sled runs a sled agent, and one thing that sled agent can do is start virtual machines. This is where Propolis comes in, as a userspace frontend to the bhyve hypervisor.

This is a great place for us to start because Propolis doesn’t depend on any other services to run. It just sits there and exposes an API to make VMs.

Let’s build it!

vi@box:~/oxide-at-home$ git clone https://github.com/oxidecomputer/propolis

vi@box:~/oxide-at-home$ cd propolis

vi@box:~/oxide-at-home/propolis$ cargo build

This will make target/debug/propolis-cli and target/debug/propolis-server. I copied those over to /usr/local/bin and moved on with my life, just get them on your PATH somehow if you’re following along at home.

Configuring Propolis

Anyway, how do we use this? First we need a config file, and the README provides this helpful example:

bootrom = "/path/to/bootrom/OVMF_CODE.fd"

[block_dev.alpine_iso]

type = "file"

path = "/path/to/alpine-extended-3.12.0-x86_64.iso"

[dev.block0]

driver = "pci-virtio-block"

block_dev = "alpine_iso"

pci-path = "0.4.0"

[dev.net0]

driver = "pci-virtio-viona"

vnic = "vnic_name"

pci-path = "0.5.0"

First question - what the hell is OVMF_CODE.fd? I did a pkg search for it and not a single package has it, but it’s the bootrom used when the VM starts up. Comes from a project called EDK2 I guess? I’m fuzzy on the details, but I followed a trail from the Arch Linux edk2-ovmf package to this GitHub wiki and eventually this Jenkins build artifact folder on the personal website of a QEMU dev.

I grabbed the x64 rpm, extracted it a few times with 7zip, and eventually got my hands on OVMF_CODE-pure-efi.fd. This ended up working out so, cool I guess.

EDIT: I have since been informed that the Propolis README has a link to a recommended bootrom. As you’ll soon see, my propensity for not reading READMEs all the way through knows no bounds. I pretty much just copied the example config file out and decided I’d come back to the README if I ran into a problem I couldn’t solve, and unfortunately I’m very good at solving problems. Sorry Oxide folks, thanks for putting up with my bullshit <3.

Next, I downloaded a copy of the alpine-extended ISO for 3.15 since that’s the latest right now.

Finally, you see that vnic = line? We need to give it a vnic-type network interface. The README actually explains the correct way to do this but I didn’t bother to read that. I just read the man page and threw stuff at the terminal until it did something useful.

vi@box:~/oxide-at-home$ dladm show-link

LINK CLASS MTU STATE BRIDGE OVER

e1000g1 phys 1500 down -- --

e1000g0 phys 1500 up -- --

vi@box:~/oxide-at-home/propolis$ sudo dladm create-vnic -l e1000g0 propolis

dladm: invalid link name 'propolis'

vi@box:~/oxide-at-home/propolis$ sudo dladm create-vnic -l e1000g0 e1000g9

vi@box:~/oxide-at-home$ dladm show-link

LINK CLASS MTU STATE BRIDGE OVER

e1000g1 phys 1500 down -- --

e1000g0 phys 1500 up -- --

e1000g9 vnic 1500 up -- e1000g0

So this totally breaks naming conventions but I couldn’t figure out what constitutes a “valid link name” from the man page. If I had actually read the README more I would have seen the suggestion of vnic_prop0. You should use that instead! But my config will use my best effort shitpost name instead, since that’s what really happened.
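For what it’s worth, the rule seems to be that a link name has to end in a number, which would explain why `propolis` bounced while `e1000g9` and `vnic_prop0` are fine. Below is a rough sketch of the check in shell. The rules in the comment are my assumption about what dladm enforces, not something quoted from the man page, and `valid_linkname` is just a name I picked.

```shell
# valid_linkname NAME: succeed if NAME looks like something dladm would accept.
# Assumed rules: only letters, digits, '_' and '.'; must not start with a
# digit; must end in a number with no leading zero; at most 31 characters.
valid_linkname() {
    name=$1
    [ "${#name}" -le 31 ] || return 1
    case $name in
        ''|[0-9]*)        return 1 ;;  # empty, or starts with a digit
        *[!A-Za-z0-9_.]*) return 1 ;;  # character outside the allowed set
        *[!0-9])          return 1 ;;  # does not end in a digit
    esac
    # Split off the trailing digits to check them for a leading zero.
    stem=${name%"${name##*[!0-9]}"}
    number=${name#"$stem"}
    case $number in
        0?*) return 1 ;;
    esac
    return 0
}

for n in propolis e1000g9 vnic_prop0; do
    if valid_linkname "$n"; then echo "$n: ok"; else echo "$n: invalid"; fi
done
```

With those assumed rules, the sketch rejects `propolis` and accepts the other two, which at least agrees with what dladm did above.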

With all that done, my final config file looks a bit like this:

bootrom = "/export/home/vi/oxide-at-home/edk2/usr/share/edk2.git/ovmf-x64/OVMF_CODE-pure-efi.fd"

[block_dev.alpine_iso]

type = "file"

path = "/export/home/vi/oxide-at-home/run/alpine-extended-3.15.0-x86_64.iso"

[dev.block0]

driver = "pci-virtio-block"

block_dev = "alpine_iso"

pci-path = "0.4.0"

[dev.net0]

driver = "pci-virtio-viona"

vnic = "e1000g9"

pci-path = "0.5.0"
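Since the config is mostly a pile of absolute paths, a quick sanity check that they all exist is cheap. Here’s a minimal sketch. It’s a line-oriented sed hack rather than a real TOML parser, it only looks at the `bootrom` and `path` keys from the example above, and both function names are invented for this post.

```shell
# config_paths FILE: print the quoted values of the bootrom and path keys.
# Anchored at the start of the line, so "pci-path" is not picked up.
config_paths() {
    sed -n \
        -e 's/^[[:space:]]*bootrom[[:space:]]*=[[:space:]]*"\(.*\)"[[:space:]]*$/\1/p' \
        -e 's/^[[:space:]]*path[[:space:]]*=[[:space:]]*"\(.*\)"[[:space:]]*$/\1/p' \
        "$1"
}

# check_config FILE: report each referenced file that does not exist.
# Returns non-zero if anything is missing.
check_config() {
    config_paths "$1" | (
        status=0
        while IFS= read -r p; do
            if [ ! -e "$p" ]; then
                echo "missing: $p" >&2
                status=1
            fi
        done
        exit "$status"
    )
}
```

Usage would be something like `check_config propolis.toml && echo "config paths look fine"`.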

Using Propolis

With a config file that looked good and all the hubris of a university student on orientation day I started the propolis server.

vi@box:~/oxide-at-home/run$ sudo propolis-server run propolis.toml 127.0.0.1:12400

In another terminal I told propolis to make a VM.

propolis-cli -s 127.0.0.1 new cirno -m 1024 -c 1

Peeking over at the propolis logs, I saw this:

Mar 13 22:36:20.531 INFO Starting server...

Mar 13 22:36:56.204 INFO accepted connection, remote_addr: 127.0.0.1:46363, local_addr: 127.0.0.1:12400

Mar 13 22:36:56.210 INFO request completed, error_message_external: Internal Server Error, error_message_internal: Cannot build instance: No such file or directory (os error 2), response_code: 500, uri: /instances/3915cdd5-3998-4f42-b728-0f8b594afae0, method: PUT, req_id: 0535a501-4467-4f4d-8da5-029e5ed26a20, remote_addr: 127.0.0.1:46363, local_addr: 127.0.0.1:12400

What do you MEAN “No such file or directory”?????
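That log line is long enough that the one useful field is easy to miss. Here’s a small sketch for pulling it out, assuming the `key: value, key: value` layout shown above with `response_code` right after the internal message. The sample input is an abbreviated copy of the real line.

```shell
# internal_error: read log lines on stdin, print only the internal error text.
internal_error() {
    sed -n 's/.*error_message_internal: \(.*\), response_code:.*/\1/p'
}

echo 'Mar 13 22:36:56.210 INFO request completed, error_message_external: Internal Server Error, error_message_internal: Cannot build instance: No such file or directory (os error 2), response_code: 500, method: PUT' \
    | internal_error
```

For that sample it prints `Cannot build instance: No such file or directory (os error 2)`, which is the whole mystery in one line.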

I tried poking at the code but that went nowhere fast. My usual debugging strategy here is to use strace but we’re in illumos land so we need to use dtrace instead, which is like if someone (Bryan Cantrill) decided strace needed awk built in. Now, I’m usually content to just pipe the whole firehose of strace into awk and filter from there but dtrace is actually pretty neat, if a bit confusing at first. And it’s got a pony. Does strace have a pony? I don’t think so.

I wanted to see all openat invocations, so I grabbed the probe id.

vi@box:~/oxide-at-home/run$ sudo dtrace -l | grep openat

22431 fbt genunix openat entry

22432 fbt genunix openat return

Then I ran propolis-server with dtrace and tried to make another VM.

vi@box:~/oxide-at-home/run$ sudo dtrace -i '22431 { printf("%s", copyinstr(arg1)) }' -c 'propolis-server run /export/home/vi/oxide-at-home/run/propolis.toml 127.0.0.1:12400' 2>&1 | grep -v -e '/proc' -e '/etc/ttysrch' -e /var/adm/utmpx -e '/dev/pts/3'

dtrace: description '22431 ' matched 1 probe

Mar 13 23:15:02.407 INFO Starting server...

Mar 13 23:15:04.980 INFO accepted connection, remote_addr: 127.0.0.1:40237, local_addr: 127.0.0.1:12400

Mar 13 23:15:04.983 INFO request completed, error_message_external: Internal Server Error, error_message_internal: Cannot build instance: No such file or directory (os error 2), response_code: 500, uri: /instances/b745d636-c8b6-46e5-bb08-839af892b702, method: PUT, req_id: 38dc08e3-e2d5-4561-a328-f48984011a8f, remote_addr: 127.0.0.1:40237, local_addr: 127.0.0.1:12400

13 22431 openat:entry /var/ld/64/ld.config

13 22431 openat:entry /usr/lib/64/libsqlite3.so.0

[... snip bunch of random dlls ...]

13 22431 openat:entry /etc/certs/ca-certificates.crt

4 22431 openat:entry /dev/vmmctl

Hmm what’s /dev/vmmctl? Ha. haha. Remember how I said Propolis is a frontend for bhyve? That’s the bhyve control device. Does it exist?

vi@box:~/oxide-at-home/run$ ls /dev/vmmctl

/dev/vmmctl: No such file or directory

No, no of course it doesn’t, because I forgot to install bhyve. Let’s do that shall we?

vi@box:~/oxide-at-home/run$ sudo pkg install system/bhyve bhyve/firmware brand/bhyve system/library/bhyve
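A note on the dtrace invocation from earlier: a numeric probe ID like 22431 is not something to rely on from one machine to the next, and an fbt probe fires for every process on the system, which is presumably where some of the noise I grepped away came from. Here’s a sketch of a tidier version, assuming the syscall provider’s `openat` probe and the `$target` macro that dtrace fills in when you pass `-c`. It’s untested against propolis-server, and `trace_opens` is a made-up wrapper name.

```shell
# D program: print the path argument of every openat(2) made by the traced
# command. $target is replaced by dtrace with the pid of the -c command.
OPENAT_D='syscall::openat:entry /pid == $target/ { printf("%s\n", copyinstr(arg1)); }'

# trace_opens COMMAND: run COMMAND under dtrace (needs root).
trace_opens() {
    dtrace -q -n "$OPENAT_D" -c "$1"
}

# Example (not run here, /path/to is a placeholder):
#   trace_opens 'propolis-server run /path/to/propolis.toml 127.0.0.1:12400'
```

Naming the probe and filtering on the pid should make the `grep -v` chain mostly unnecessary.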

Using Propolis, for real this time

I restarted Propolis, and finally, FINALLY, we can create a VM.

vi@box:~/oxide-at-home/run$ propolis-cli -s 127.0.0.1 new cirno -m 1024 -c 1

We have to explicitly turn on VMs after they’re created, and then we can interact with them over serial. propolis-cli can give us a serial connection to the VM, but here I ran into a little snag. The alpine image we’re using attaches the console to VGA by default, so I had to attach to serial first in one terminal, start the VM up in the other, then switch back to the serial connection to stop grub from autobooting.

vi@box:~/oxide-at-home/run$ propolis-cli -s 127.0.0.1 serial cirno

vi@box:~/oxide-at-home/run$ propolis-cli -s 127.0.0.1 state cirno run

[ grub appears on the serial connection ]

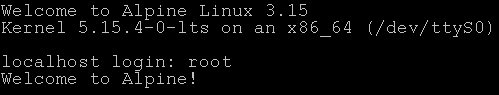

Once grub came up I removed quiet from the linux arguments and added console=ttyS0. I hit the button to boot the system, and at long last, I had victory:

This is where I stopped, to prevent my brain from melting.
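For the record, the grub edit boils down to a tiny transformation on the kernel line: drop `quiet`, add `console=ttyS0`. Here it is as a shell sketch, purely illustrative. The sample line is hypothetical, not copied from the Alpine ISO, and `fix_cmdline` is a name I made up.

```shell
# fix_cmdline LINE: drop the word "quiet" and append console=ttyS0.
fix_cmdline() {
    out=
    set -f                      # no glob expansion while splitting into words
    for word in $1; do
        [ "$word" = quiet ] && continue
        out="${out:+$out }$word"
    done
    set +f
    printf '%s\n' "${out:+$out }console=ttyS0"
}

# Hypothetical kernel line, for illustration only.
fix_cmdline 'linux /boot/vmlinuz-lts modules=loop,squashfs quiet'
```

For the made-up line above this prints `linux /boot/vmlinuz-lts modules=loop,squashfs console=ttyS0`.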

What’s next?

I’m not sure! I think sled-agent is the logical next step as we work our way from the ground up trying to build out a fully working deployment (for some definitions of “working” and “deployment”) but we’ll see.

Or maybe I’ll build a server rack out of cardboard. You never know.